Test dataset from sklearn

```python
from sklearn.datasets import fetch_20newsgroups

# PREPROCESSING
dataset = fetch_20newsgroups(
    shuffle=True, random_state=1, remove=("headers", "footers", "quotes")
)

# Only 1000 documents for performance reasons
documents = dataset.
```

Load word vectors used for coherence computation

```python
import spacy

nlp = spacy.load("en_core_web_md")
```

Detect robust sklearn topic models

```python
from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer
from sklearn.decomposition import LatentDirichletAllocation, NMF
from robics import RobustTopics

# Document vectorization using raw term counts (CountVectorizer, not TF-IDF)
tf_vectorizer = CountVectorizer(max_df=0.95, min_df=2, stop_words="english")
tf = tf_vectorizer.fit_transform(documents)
# get_feature_names() was removed in scikit-learn 1.2;
# newer versions provide get_feature_names_out() instead.
tf_feature_names = tf_vectorizer.get_feature_names()

# TOPIC MODELLING
robustTopics = RobustTopics(nlp)

# Load NMF and LDA models
robustTopics.load_sklearn_model(
    LatentDirichletAllocation, tf, tf_vectorizer,
    dimension_range=, n_samples=4, n_initializations=3
)
robustTopics.load_sklearn_model(
    NMF, tf, tf_vectorizer,
    dimension_range=, n_samples=4, n_initializations=3
)

# Fit the models - Warning, this might take a lot of time based on the
# number of samples (n_models*n_sample*n_initializations)
robustTopics.
```

We start by looking at the ranking of all models: `robustTopics.`

The top model is the NMF model with 27 topics (model_id 1 and sample_id 0). The next step is to look at the model on a word level.

```python
robustTopics.display_sample_topic_consistency(model_id=1, sample_id=0)
# Output:
# Words in 3 out of 3 runs :
# Words in 1 out of 3 runs :
```

Most of the words appear in all three re-initializations. Only 'know' and 'goals' are inconsistent.

In comparison, let's look at the model performing worst: `robustTopics.`
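To make the "Words in 3 out of 3 runs" output easier to interpret, here is a minimal sketch of how such a word-level consistency table could be computed. It is not the robics implementation: the helper `words_by_run_count` and the example word lists are hypothetical and only illustrate the idea of counting in how many re-initializations each top word of a matched topic appears.

```python
from collections import Counter


def words_by_run_count(top_words_per_run):
    """Group words by the number of runs whose top-word list contains them.

    top_words_per_run: one list of top words per re-initialization,
    all belonging to the same (already matched) topic.
    """
    n_runs = len(top_words_per_run)
    # Count each word at most once per run.
    counts = Counter(w for words in top_words_per_run for w in set(words))
    grouped = {k: [] for k in range(n_runs, 0, -1)}
    for word, k in sorted(counts.items()):
        grouped[k].append(word)
    return grouped


# Made-up top words for one topic across three re-initializations
# (illustrative data, not output from the library).
runs = [
    ["space", "nasa", "launch", "know"],
    ["space", "nasa", "launch", "goals"],
    ["space", "nasa", "launch"],
]

for k, words in words_by_run_count(runs).items():
    print(f"Words in {k} out of {len(runs)} runs: {words}")
```

A topic whose words mostly fall into the "3 out of 3" group is stable across re-initializations; many words in the "1 out of 3" group suggest the topic changes from run to run.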


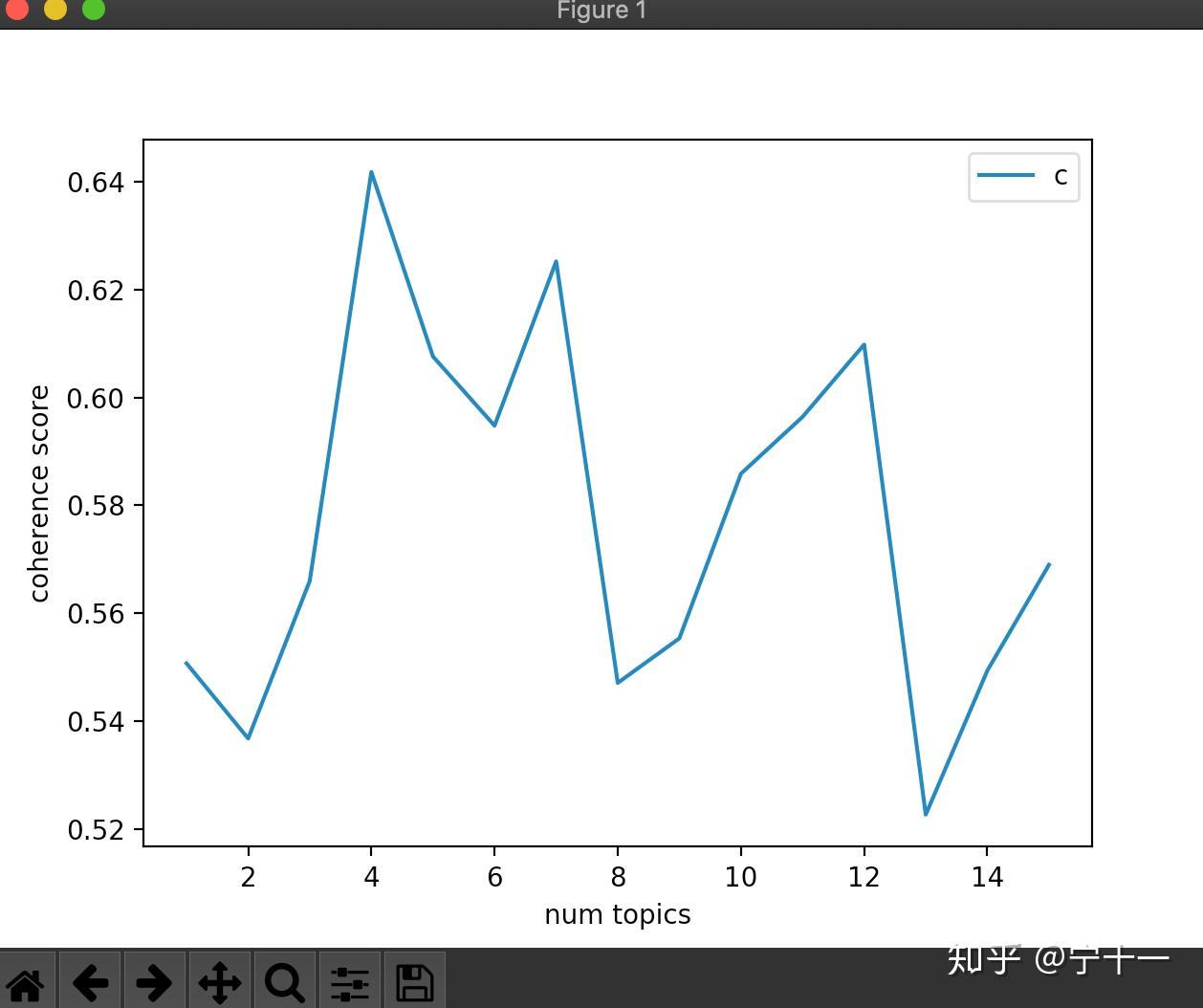

RobustTopics is a library targeted at non-machine learning experts interested in building robust topic models. The main goal is to provide a simple to use framework to check if a topic model reaches the same, or at least a similar, result on each run.

Using pip, robics releases are available as source packages and binary wheels:

```
pip install robics
```

- Supports sklearn (LatentDirichletAllocation, NMF) and gensim (LdaModel, ldamulticore, nmf) topic models.
- Creates samples based on the Sobol sequence, which requires fewer samples than grid search and makes sure the whole parameter space is covered, which random sampling does not guarantee.
- Simple topic matching between the different re-initializations for each sample using word vector based coherence scores.
- Ranking of all models based on four metrics:
  - Jaccard distance of the top n words for each topic.
  - Similarity of topic distributions based on the Jensen-Shannon divergence.
  - Ranking correlation of the top n words based on Kendall's Tau.
  - Word vector based coherence score (simple version of the TC-W2V).
- Word based analysis of samples and topic model instances.
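Three of the ranking metrics named above (Jaccard distance, Jensen-Shannon divergence, and Kendall's Tau) can be sketched with SciPy as follows. This is an illustrative sketch, not the code robics uses internally: the function names are hypothetical, and restricting the rank correlation to the words shared by both lists is an assumption made here to keep the example simple.

```python
from scipy.spatial.distance import jensenshannon
from scipy.stats import kendalltau


def jaccard_distance(words_a, words_b):
    """1 - |A intersect B| / |A union B| for two collections of top words."""
    a, b = set(words_a), set(words_b)
    if not a and not b:
        return 0.0
    return 1.0 - len(a & b) / len(a | b)


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two topic-word distributions."""
    # SciPy returns the JS *distance*, the square root of the divergence.
    return float(jensenshannon(p, q, base=2) ** 2)


def rank_correlation(words_a, words_b):
    """Kendall's tau over the ranks of the words shared by both top-n lists."""
    shared = [w for w in words_a if w in words_b]
    if len(shared) < 2:
        return float("nan")  # correlation is undefined for fewer than two items
    ranks_a = [words_a.index(w) for w in shared]
    ranks_b = [words_b.index(w) for w in shared]
    tau, _ = kendalltau(ranks_a, ranks_b)
    return float(tau)
```

Applied to the same topic from two re-initializations, a low Jaccard distance, a low Jensen-Shannon divergence, and a Kendall's Tau close to 1 all indicate that the two runs agree.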
